Our birthday CTF nearly didn't happen...

We put a lot of effort into testing our CTF platform, but a simple oversight nearly meant we had to call it off.

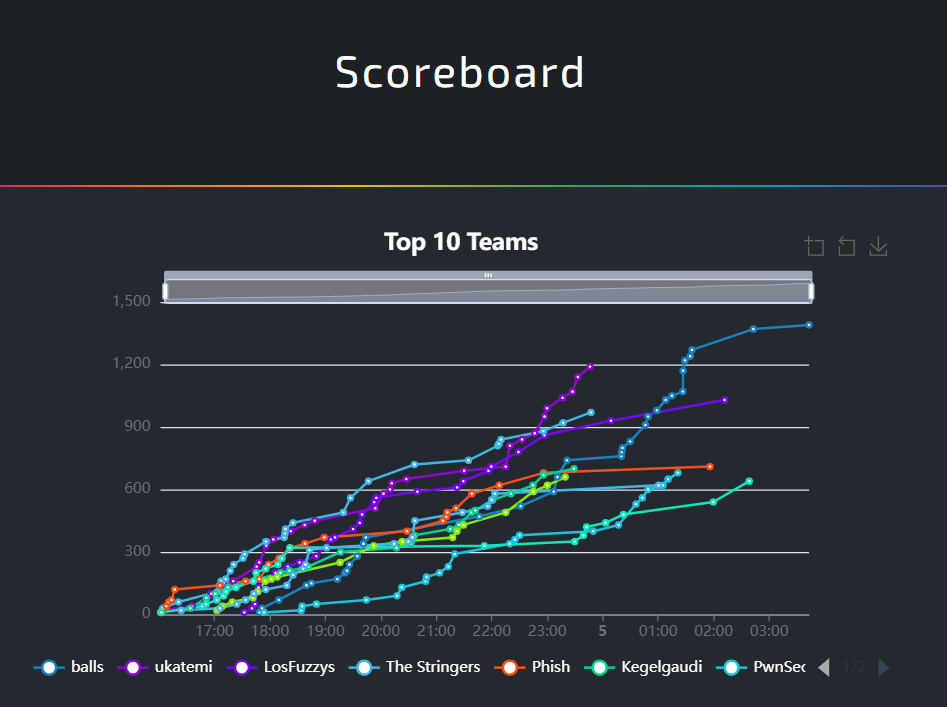

Our 2023 Punk Security Birthday CTF was a huge hit, with fantastic feedback coming in from our players. Our CTF was different to most CTFs you will find over at CTFtime because we gave every player their very own lab environment for almost every challenge. This meant we could create some amazing challenges, like full Kubernetes hacks and Jenkins-based pipeline attacks.

For one of our challenges, we had a Jenkins server running jobs from a Gitea repository. The Jenkins server AND Gitea server were both built and configured at challenge launch time, alongside two other containers that made access possible.

To make all this possible, we used a heavily modified version of CTFd that spun up (and down) all of our challenges in AWS. We’ve actually open-sourced this work here. Some challenges required an EC2 pool because they ran with permissions that AWS would not tolerate on AWS Fargate.

What is AWS Fargate and what are EC2 Pools?

When running containers in AWS (which we did for all of our awesome challenges) you have a few options.

- AWS EKS (Kubernetes)

You can run containers in EKS, using either the AWS EKS interface or the Kubernetes-compliant API (so you can use kubectl). This is awesome, but adds a layer of complexity you almost never need.

- AWS Lambda / Batch

You can run containers in AWS Lambda and Batch as ad-hoc tasks, but these have a defined input and output AND a maximum runtime.

- AWS ECS - backed by EC2 Pools

AWS ECS allows you to run containers without having to worry about the management plane, and those containers can run just like any other container you are used to. They get IPs (private or public) in AWS and you can use Security Groups etc. as usual. These containers run on EC2 servers in the background.

- AWS ECS - backed by Fargate

AWS Fargate removes the need to run EC2 servers. Your containers are now run by AWS. They interact with AWS and the wider world exactly the same, but you no longer see any EC2 servers in your account. There are some limitations though, like not being able to run privileged containers.
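To make the difference between the two ECS options concrete, here is a minimal Python sketch of how a launcher might pick between them. It is not our actual platform code: the function name and its arguments are made up for illustration, and it assumes both task definitions use the `awsvpc` network mode. The dictionary keys are the ones boto3's `ecs.run_task` accepts.

```python
from __future__ import annotations

from typing import Any


def build_run_task_request(
    cluster: str,
    task_definition: str,
    subnets: list[str],
    security_groups: list[str],
    privileged: bool,
) -> dict[str, Any]:
    """Build keyword arguments for boto3's ecs.run_task (hypothetical helper)."""
    # Fargate cannot run privileged containers, so those go to the EC2-backed pool.
    launch_type = "EC2" if privileged else "FARGATE"
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "launchType": launch_type,
        "count": 1,
        # Assumes awsvpc networking, so each task gets its own IP and Security Groups.
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
            }
        },
    }
```

With boto3 installed and credentials configured, the result could be passed straight through as `boto3.client("ecs").run_task(**request)`.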

With that primer out of the way, we can continue. For most of our challenges, we used AWS Fargate. This meant that we didn’t have to worry about whether we had 100 players or 1000: challenges would just launch on AWS Fargate.

Unfortunately, we couldn’t do this for privileged containers. We needed these for the Docker and Kubernetes challenges, so we had a pool of 200 EC2 servers running to handle them. This carries a risk that a player could escalate privileges all the way out of their container and land on the EC2! We dropped a little Easter Egg on the EC2s but no one found it 😭

We decided that each EC2 would only ever run one challenge, and when that challenge was terminated, we would kill the EC2 too. This worked really well, and an Auto Scaling group in AWS would just spin us up a new EC2 whenever we killed one. We had about a 30-second period where we were down one EC2.
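The "one challenge per EC2, then kill it" approach can be sketched in a few lines of Python. This is an illustration rather than our actual code: `retire_instance` is a hypothetical name, and the client is passed in so the function stays easy to test. The call it makes, `terminate_instance_in_auto_scaling_group`, is a real boto3 Auto Scaling method.

```python
def retire_instance(autoscaling_client, instance_id: str) -> None:
    """Terminate a used instance and let the Auto Scaling group replace it."""
    autoscaling_client.terminate_instance_in_auto_scaling_group(
        InstanceId=instance_id,
        # Leave the desired capacity alone so the group launches a fresh
        # replacement instead of shrinking the pool.
        ShouldDecrementDesiredCapacity=False,
    )
```

In real use the client would come from `boto3.client("autoscaling")`, and the function would be called once the challenge on that instance has been torn down.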

So what went wrong?

AWS has quotas. Quotas set a maximum for all kinds of things, like the number of public IPs you can have and the number of EC2 instances. We had already done some rough sizing of the EC2 pool and had got ourselves a reasonable quota.

On the morning of the CTF, with 8 hours to go, we realised that our AWS Fargate quota was sitting at 128 containers!

We had been so focused on testing the EC2 pools, scaling them up and down, that we had forgotten all about the AWS Fargate quotas that we had only half increased during development. Our CTF platform itself required 1 admin container, 8 Apache Guacamole containers and 8 CTFd containers. Those 17 containers left us with just 111 for challenges before we hit the quota.
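A pre-flight check along these lines would have caught the problem much earlier. The sketch below is plain arithmetic in Python, not something we actually ran: the function and field names are hypothetical, and the expected challenge load in the usage example is an illustrative figure rather than a measured requirement.

```python
from dataclasses import dataclass


@dataclass
class QuotaCheck:
    reserved: int   # tasks the platform itself needs
    headroom: int   # tasks left over for player challenges
    shortfall: int  # extra quota needed to cover the expected load


def check_task_quota(
    quota: int,
    platform_tasks: dict[str, int],
    expected_challenge_tasks: int,
) -> QuotaCheck:
    """Compare a task quota against platform overhead plus expected challenges."""
    reserved = sum(platform_tasks.values())
    headroom = max(quota - reserved, 0)
    shortfall = max(expected_challenge_tasks - headroom, 0)
    return QuotaCheck(reserved=reserved, headroom=headroom, shortfall=shortfall)


if __name__ == "__main__":
    check = check_task_quota(
        quota=128,
        platform_tasks={"admin": 1, "guacamole": 8, "ctfd": 8},
        expected_challenge_tasks=400,  # illustrative load, not a measured value
    )
    if check.shortfall > 0:
        print(f"Request a quota increase of at least {check.shortfall} tasks")
```

Running something like this as part of a launch checklist turns a forgotten quota into a loud warning days before the event, rather than a surprise on the morning.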

We immediately raised a quota increase request with AWS and asked for a callback. AWS support is actually really great for getting an immediate callback, but we had a tense few hours as we were initially told that AWS was unsure if it had the burst capacity we needed in the London region.

After a worrying few hours, we had our quota increase and we could rest a little easier.

Lessons learnt

We did almost everything right with the CTF. We peaked at nearly 400 concurrent AWS Fargate tasks and 170 concurrent EC2 servers! That’s a busy AWS account!

Our challenges included some amazing technical feats. For two challenges, every player had their own DNS zone and IAM role deployed at launch time.

We even did a zero-downtime deployment to fix a bug, replacing the entire CTFd platform without a single player noticing.

Even with all the preparation, testing and redundancy, issues can happen. Fortunately, we have a fantastic team here at Punk Security, which means we can adapt and overcome!